Digital Twin for Predictive Asset Management

Defining the Scope and Objectives

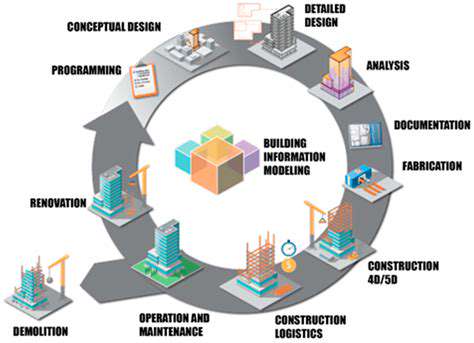

A crucial first step in building a digital twin is to define its scope and objectives clearly. This means identifying which aspects of the physical system to model, the level of detail required, and the applications the digital twin is meant to serve. Without a well-defined scope, the twin can quickly become overly complex and fail to deliver meaningful insights. Careful planning at this stage helps ensure that the model captures the key performance indicators and operational requirements of the real-world system.

Understanding the desired outcomes, such as improved efficiency, reduced downtime, or enhanced safety, is paramount. These objectives will guide the data collection process and the features included in the digital twin model.
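A minimal sketch of how the scope and objectives could be recorded as a machine-readable specification, so that each objective is tied to a measurable KPI with a target. The class names (`TwinScope`, `Objective`), the asset identifier, the KPI names, and the target values are all hypothetical illustrations, not a standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Objective:
    """One measurable objective the twin should serve (hypothetical structure)."""
    kpi: str                      # name of the key performance indicator
    target: float                 # value the organization aims to reach
    lower_is_better: bool = True  # e.g. downtime: lower is better


@dataclass
class TwinScope:
    """Hypothetical scope record: what is modeled, at what detail, and why."""
    asset: str
    modeled_components: list[str]
    sampling_period_s: float      # temporal level of detail
    objectives: list[Objective] = field(default_factory=list)

    def targets_met(self, kpi_values: dict[str, float]) -> dict[str, bool]:
        """Report, per objective, whether the measured KPI reaches its target."""
        result = {}
        for obj in self.objectives:
            value = kpi_values[obj.kpi]
            if obj.lower_is_better:
                result[obj.kpi] = value <= obj.target
            else:
                result[obj.kpi] = value >= obj.target
        return result


# Example scope for a hypothetical pump.
scope = TwinScope(
    asset="pump-07",
    modeled_components=["motor", "bearings", "impeller"],
    sampling_period_s=1.0,
    objectives=[
        Objective("unplanned_downtime_h_per_month", 4.0),
        Objective("availability", 0.98, lower_is_better=False),
    ],
)
print(scope.targets_met({"unplanned_downtime_h_per_month": 3.5, "availability": 0.97}))
# -> {'unplanned_downtime_h_per_month': True, 'availability': False}
```

Keeping the scope in this form makes it straightforward to check later whether the twin is actually serving the objectives it was built for.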

Data Acquisition Strategies

Effective data collection is the foundation of a reliable digital twin. It involves identifying the relevant data sources, selecting appropriate sensors and instrumentation, and building robust data pipelines that feed the digital twin platform. Data quality needs particular attention: every data stream should be accurate, complete, and consistent with the others.
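The three quality dimensions named above can be checked automatically on each stream. The sketch below assumes a single numeric stream with timestamps in seconds, missing samples encoded as `None`, and a plausible physical range supplied by an engineer; the function name `assess_quality` and the range values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class QualityReport:
    completeness: float    # share of samples that are present (not None)
    in_range: float        # share of present samples inside the plausible range
    monotonic_time: bool   # timestamps strictly increasing (basic consistency check)


def assess_quality(timestamps: Sequence[float],
                   values: Sequence[Optional[float]],
                   valid_min: float,
                   valid_max: float) -> QualityReport:
    """Hypothetical per-stream check of completeness, accuracy and consistency."""
    if len(timestamps) != len(values):
        raise ValueError("timestamps and values must have the same length")
    present = [v for v in values if v is not None]
    completeness = len(present) / len(values) if values else 0.0
    in_range = (sum(valid_min <= v <= valid_max for v in present) / len(present)
                if present else 0.0)
    monotonic = all(t1 > t0 for t0, t1 in zip(timestamps, timestamps[1:]))
    return QualityReport(completeness, in_range, monotonic)


# Hypothetical temperature stream (degC) with one gap and one implausible spike.
report = assess_quality([0, 1, 2, 3, 4], [20.1, None, 21.0, 250.0, 20.8],
                        valid_min=0.0, valid_max=150.0)
print(report)
# -> QualityReport(completeness=0.8, in_range=0.75, monotonic_time=True)
```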

Sensor data from physical assets, operational logs, and historical records must all be considered and harmonized so that, together, they give a comprehensive picture of the system's behavior and performance.
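Harmonizing sources often means aligning records that arrive at different rates. A minimal sketch, assuming both sources share a common clock and are already sorted by timestamp: each sensor sample is tagged with the most recent operating state from an operational log (an "as-of" join). The function name and the sample data are hypothetical; pandas users may prefer `pandas.merge_asof`, which performs the same kind of backward-looking match on DataFrames.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple


def asof_join(
    sensor: List[Tuple[float, float]],
    log: List[Tuple[float, str]],
) -> List[Tuple[float, float, Optional[str]]]:
    """Tag each (timestamp, value) sensor sample with the latest log state at or before it.

    Both inputs must be sorted by timestamp and share the same clock.
    Samples recorded before the first log entry get the state None.
    """
    log_times = [t for t, _ in log]
    joined = []
    for t, value in sensor:
        i = bisect_right(log_times, t)          # count of log entries with time <= t
        state = log[i - 1][1] if i > 0 else None
        joined.append((t, value, state))
    return joined


# Hypothetical vibration readings (mm/s) and operating-mode log entries.
sensor = [(0.0, 1.2), (5.0, 1.4), (12.0, 3.9)]
log = [(3.0, "idle"), (10.0, "full_load")]
print(asof_join(sensor, log))
# -> [(0.0, 1.2, None), (5.0, 1.4, 'idle'), (12.0, 3.9, 'full_load')]
```

Tagging readings with the operating state lets later stages compare, for example, vibration at full load against earlier full-load periods rather than against idle periods.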

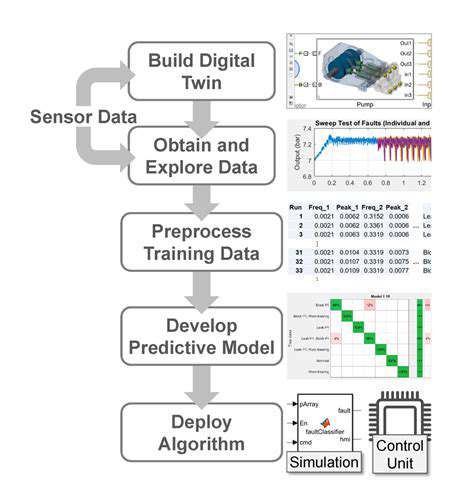

Sensor Integration and Data Preprocessing

Integrating data from multiple sensors and sources into the digital twin platform means transforming raw data into a usable format, resolving inconsistencies between sources, and handling missing or erroneous data points. Robust preprocessing is essential to the accuracy and reliability of the model.
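One common way to handle short gaps is linear interpolation between the nearest valid neighbors, while leaving long gaps unfilled so the model is not trained on invented data. This is a sketch under stated assumptions: samples are evenly spaced, missing points are `None`, and the `max_gap` threshold of three samples is an arbitrary illustrative choice.

```python
from typing import List, Optional


def fill_gaps(values: List[Optional[float]], max_gap: int = 3) -> List[Optional[float]]:
    """Linearly interpolate interior runs of missing samples (None) up to max_gap long.

    Assumes evenly spaced samples. Longer gaps and gaps at either end are left as
    None, so the model never sees long stretches of fabricated data.
    """
    out = list(values)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i                       # first missing index of this run
        while i < n and out[i] is None:
            i += 1
        end = i                         # first index after the run
        gap = end - start
        if start == 0 or end == n or gap > max_gap:
            continue                    # edge gap or too long: leave as None
        left, right = out[start - 1], out[end]
        step = (right - left) / (gap + 1)
        for k in range(gap):
            out[start + k] = left + step * (k + 1)
    return out


print(fill_gaps([1.0, None, 3.0, None, None, None, None, 8.0, None]))
# -> [1.0, 2.0, 3.0, None, None, None, None, 8.0, None]
```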

Cleaning the data and transforming it into a consistent format is crucial for the model to work effectively. Two vital steps are standardizing units of measurement (for example, converting every temperature reading to degrees Celsius) and detecting and handling outliers.
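Both steps can be sketched as follows, assuming each reading arrives tagged with its quantity and unit. The conversion table covers only a hypothetical subset of units, and outliers are flagged with the modified z-score based on the median absolute deviation (MAD), a robust technique chosen here for illustration; the 3.5 cut-off is a commonly used rule of thumb, not a requirement.

```python
import statistics

# Conversions into a canonical unit per quantity (illustrative subset only).
TO_CANONICAL = {
    ("temperature", "degF"): lambda x: (x - 32.0) * 5.0 / 9.0,  # -> degC
    ("temperature", "degC"): lambda x: x,
    ("pressure", "psi"): lambda x: x * 0.0689476,               # -> bar
    ("pressure", "bar"): lambda x: x,
}


def to_canonical(quantity: str, unit: str, value: float) -> float:
    try:
        return TO_CANONICAL[(quantity, unit)](value)
    except KeyError:
        raise ValueError(f"no conversion for {quantity} in {unit}") from None


def mad_outliers(values, threshold: float = 3.5):
    """Flag outliers using the modified z-score 0.6745 * (x - median) / MAD.

    More robust than mean/std, because a single extreme reading barely shifts
    the median or the MAD.
    """
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # Degenerate case: at least half the readings are identical, so the
        # score is undefined; this sketch flags nothing.
        return [False] * len(values)
    return [abs(0.6745 * (v - med) / mad) > threshold for v in values]


print(to_canonical("temperature", "degF", 212.0))                # -> 100.0
print(mad_outliers([20.1, 20.3, 19.9, 20.2, 35.0, 20.0]))
# -> [False, False, False, False, True, False]
```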

Model Selection and Parameterization

Choosing the appropriate model architecture is a critical step in creating a digital twin. This decision depends on the complexity of the system being modeled and the desired level of accuracy. Model selection should be guided by factors like computational resources, data availability, and the need for real-time updates.
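The trade-off described above can be made explicit: among candidate models, keep only those that fit the available data and the real-time latency budget, then pick the most accurate. The candidate names, error figures, latencies, and data requirements below are hypothetical placeholders; in practice they would come from benchmarking each candidate on the actual system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    validation_rmse: float   # error measured on held-out data
    latency_ms: float        # measured inference time per update
    min_samples: int         # rough amount of training data the approach needs


def select_model(candidates: List[Candidate],
                 latency_budget_ms: float,
                 available_samples: int) -> Optional[Candidate]:
    """Return the most accurate candidate that fits the latency budget and the data available."""
    feasible = [c for c in candidates
                if c.latency_ms <= latency_budget_ms and c.min_samples <= available_samples]
    return min(feasible, key=lambda c: c.validation_rmse, default=None)


# Hypothetical benchmark results.
candidates = [
    Candidate("reduced_physics", validation_rmse=0.42, latency_ms=2.0, min_samples=0),
    Candidate("gradient_boosting", validation_rmse=0.31, latency_ms=8.0, min_samples=5_000),
    Candidate("deep_sequence", validation_rmse=0.25, latency_ms=40.0, min_samples=200_000),
]
print(select_model(candidates, latency_budget_ms=10.0, available_samples=20_000).name)
# -> gradient_boosting
```

With fewer samples available, the same rule falls back to the cheaper model, which mirrors how data availability constrains the choice.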

Once a model is selected, it must be parameterized: its parameters are calibrated against the available data so that it represents the real-world system accurately, behaves as expected, and produces realistic results.
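As an illustration of calibration, the sketch below fits the two parameters of a deliberately simple degradation model, wear(t) = w0 + rate * t, to inspection data by ordinary least squares. The linear wear model, the inspection values, and the wear limit are assumptions made for the example; a real twin would calibrate whichever model was selected, often with a numerical optimizer.

```python
from typing import Sequence, Tuple


def calibrate_linear_wear(t: Sequence[float], wear: Sequence[float]) -> Tuple[float, float]:
    """Fit wear(t) = w0 + rate * t by ordinary least squares; returns (w0, rate)."""
    n = len(t)
    if n < 2 or n != len(wear):
        raise ValueError("need at least two paired observations")
    t_mean = sum(t) / n
    w_mean = sum(wear) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    if sxx == 0:
        raise ValueError("observation times must not all be equal")
    sxy = sum((ti - t_mean) * (wi - w_mean) for ti, wi in zip(t, wear))
    rate = sxy / sxx                  # slope: wear per operating hour
    w0 = w_mean - rate * t_mean       # intercept: initial wear
    return w0, rate


# Hypothetical inspections: operating hours vs. measured wear in mm.
w0, rate = calibrate_linear_wear([0.0, 100.0, 200.0, 300.0], [0.10, 0.30, 0.50, 0.70])
wear_limit_mm = 1.0                           # hypothetical replacement threshold
hours_at_limit = (wear_limit_mm - w0) / rate  # about 450 operating hours
print(round(w0, 3), round(rate, 4), round(hours_at_limit))
# -> 0.1 0.002 450
```

Once calibrated, the model turns directly into a predictive-maintenance estimate: the part reaches the hypothetical 1.0 mm limit at (1.0 - 0.10) / 0.002 = 450 operating hours.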

Validation and Verification

Validating and verifying the digital twin model is crucial to its accuracy and reliability. Verification checks that the model is implemented correctly; validation checks that it faithfully represents the physical system, by comparing the model's predictions with real-world data and adjusting its parameters as needed. Both should be repeated regularly so that the digital twin remains a faithful representation of the physical system over time.

Comparing model outputs with historical data and real-time observations is essential to detect discrepancies and fine-tune the model's parameters. This process ensures the digital twin remains a useful tool for decision-making and analysis.
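A minimal sketch of that comparison: compute the mean bias, mean absolute error (MAE), and root-mean-square error (RMSE) of the residuals between predictions and observations, and flag the model for recalibration when the RMSE exceeds a tolerance. The function names and the tolerance values are hypothetical; a real tolerance would follow from the objectives set for the twin.

```python
import math
from typing import Dict, Sequence


def validation_metrics(predicted: Sequence[float], observed: Sequence[float]) -> Dict[str, float]:
    """Compare model outputs with observations: bias, MAE and RMSE of the residuals."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("need equally long, non-empty series")
    residuals = [p - o for p, o in zip(predicted, observed)]
    n = len(residuals)
    return {
        "bias": sum(residuals) / n,                # systematic over/under-prediction
        "mae": sum(abs(r) for r in residuals) / n,
        "rmse": math.sqrt(sum(r * r for r in residuals) / n),
    }


def needs_recalibration(metrics: Dict[str, float], rmse_tolerance: float) -> bool:
    """Hypothetical rule: recalibrate when the RMSE exceeds the tolerance."""
    return metrics["rmse"] > rmse_tolerance


m = validation_metrics(predicted=[10.0, 12.0, 14.0], observed=[11.0, 12.0, 12.0])
print(m["mae"], round(m["rmse"], 3), needs_recalibration(m, rmse_tolerance=1.0))
# -> 1.0 1.291 True
```

A non-zero bias is often the first sign of drift, because it shows the model consistently over- or under-predicting rather than merely being noisy.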

Model Refinement and Updates

The digital twin is not a static entity; it needs continuous refinement and updates to stay accurate and relevant. Regularly monitoring the model's performance and incorporating new data and insights from the physical system keeps the twin a precise representation of the real-world system, able to evolve as that system changes and to keep providing accurate predictions.

The model must also be adapted when new data sources, sensor readings, or operating procedures are introduced. Ongoing monitoring and adjustment keep the digital twin a valuable asset for the organization.
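One way to let a parameter follow slow changes in the asset without refitting from scratch is recursive least squares (RLS) with a forgetting factor. The sketch below estimates a single coefficient theta in the assumed model y = theta * x; the class name, the initial values, and the forgetting factor of 0.98 are illustrative assumptions. Values of `lam` closer to 1 remember more history and react more slowly.

```python
class OnlineGainEstimator:
    """Scalar recursive least squares for y = theta * x with a forgetting factor.

    lam < 1 discounts older samples exponentially, so theta tracks slow drift in
    the physical asset (for example an efficiency coefficient) as new data arrive.
    """

    def __init__(self, theta0: float = 0.0, p0: float = 1000.0, lam: float = 0.98):
        if not 0.0 < lam <= 1.0:
            raise ValueError("lam must be in (0, 1]")
        self.theta = theta0   # current parameter estimate
        self.p = p0           # estimate variance; a large p0 means little trust in theta0
        self.lam = lam

    def update(self, x: float, y: float) -> float:
        gain = self.p * x / (self.lam + self.p * x * x)
        self.theta += gain * (y - self.theta * x)    # correct by the prediction error
        self.p = (self.p - gain * x * self.p) / self.lam
        return self.theta


# Simulated drift: the true coefficient changes from 2.0 to 2.5.
est = OnlineGainEstimator()
for k in range(50):
    x = 1.0 + k % 5
    est.update(x, 2.0 * x)
for k in range(300):
    x = 1.0 + k % 5
    est.update(x, 2.5 * x)
print(est.theta)  # close to 2.5: older samples have been down-weighted
```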

Real-time Monitoring and Analysis

Real-time monitoring and analysis capabilities are crucial to realizing the full potential of the digital twin. They give immediate insight into the system's performance, enabling proactive interventions and adjustments that optimize operations as conditions change. This capability is key to detecting potential issues early and to adjusting the physical system dynamically.
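A minimal sketch of streaming early detection, assuming a single sensor and a rolling z-score rule: each new reading is compared with the mean and standard deviation of the previous `window` readings. The class name, the window length, and the threshold `k` are hypothetical tuning choices.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flag a reading that lies more than k standard deviations from the mean
    of the previous `window` readings (a simple streaming z-score test)."""

    def __init__(self, window: int = 60, k: float = 4.0):
        if window < 2:
            raise ValueError("window must hold at least two readings")
        self.history = deque(maxlen=window)
        self.k = k

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) == self.history.maxlen:   # wait until the window is full
            mean = statistics.mean(self.history)
            std = statistics.pstdev(self.history)
            is_anomaly = std > 0 and abs(value - mean) > self.k * std
        self.history.append(value)                     # flagged readings also enter the window
        return is_anomaly


det = RollingAnomalyDetector(window=5, k=3.0)
readings = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 15.0]
print([det.observe(v) for v in readings])
# -> [False, False, False, False, False, False, True]
```

Flagged readings still enter the window here, which widens the band slightly after an anomaly; excluding them is an equally reasonable design choice.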

Integration with dashboards and reporting tools allows for easy access to key performance metrics and insights derived from the digital twin model. Real-time analysis enables informed decision-making and proactive management of the physical system.
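Dashboards for asset management commonly display a few derived maintenance KPIs. The sketch below computes availability, mean time between failures (MTBF), and mean time to repair (MTTR) from a list of unplanned outages over a reporting period; the function name and the outage data are hypothetical, and the function assumes outages do not overlap and fall inside the period.

```python
from typing import Dict, List, Tuple


def maintenance_kpis(period_h: float, outages: List[Tuple[float, float]]) -> Dict[str, float]:
    """Compute dashboard KPIs from unplanned outages during a reporting period.

    outages: non-overlapping (start_h, end_h) pairs inside [0, period_h].
    """
    if period_h <= 0:
        raise ValueError("period must be positive")
    downtime = sum(end - start for start, end in outages)
    uptime = period_h - downtime
    failures = len(outages)
    return {
        "availability": uptime / period_h,                           # share of time in service
        "mtbf_h": uptime / failures if failures else float("inf"),   # operating hours per failure
        "mttr_h": downtime / failures if failures else 0.0,          # repair hours per failure
    }


# Hypothetical 30-day month (720 h) with two unplanned stops.
print(maintenance_kpis(720.0, [(100.0, 104.0), (400.0, 410.0)]))
# availability ≈ 0.981, MTBF = 353 h, MTTR = 7 h
```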
